Given that we’re now in lockdown, we thought it would be useful to gather together and summarise the bits of work we’ve been doing, to determine whether, and to what extent, they can be used in our future mundane caravan experience. What follows is a quick run-through.

Gesture recognition

We now have a browser-based training environment that allows us to train a convolutional neural network to recognise gestures. To train a model, a user issues voice commands to the browser. For example, “record thumbs up” starts a webcam recording and labels every gesture captured as “thumbs up”. Once a set of gestures has been recorded, the user can issue a “train” command, which builds a first version of the model. Once it is built, the user can test it in the browser (the video is overlaid with the recognised gesture and its probability score). The user can record more examples of gestures to improve accuracy. If and when the model is sufficiently accurate, it can be copied to a Raspberry Pi and used with a Pi camera to trigger events through gestures.
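The voice-driven workflow above boils down to mapping a speech transcript onto two commands. The Python sketch below assumes the browser’s speech recogniser has already produced a plain-text transcript; the `Command` type, the `parse_command` function and the exact grammar are hypothetical illustrations, not the training environment’s actual code.

```python
import re
from typing import NamedTuple, Optional


class Command(NamedTuple):
    action: str           # "record" or "train"
    label: Optional[str]  # gesture label for "record", None for "train"


# Hypothetical grammar: "record <gesture name>" or "train".
_RECORD = re.compile(r"^record\s+(?P<label>[a-z][a-z ]*)$")


def parse_command(transcript: str) -> Optional[Command]:
    """Map a speech transcript to a Command, or None if it isn't one."""
    # Normalise case and collapse runs of whitespace before matching.
    text = " ".join(transcript.lower().split())
    if text == "train":
        return Command("train", None)
    match = _RECORD.match(text)
    if match:
        return Command("record", match.group("label"))
    return None
```

For instance, `parse_command("Record Thumbs Up")` yields `Command(action='record', label='thumbs up')`, and anything that is not a recognised command returns `None` so it can simply be ignored.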

Testing has shown that, assuming no change to the background environment, the model behaves reasonably well with enough training (i.e. it misclassifies relatively few gestures). It is considerably (unsurprisingly?) less accurate when the background field of view differs from the one used in training. We expect to be able to deal with this by doing further training inside the caravan. Training or retraining the model takes about 10 to 15 minutes. We have not yet tested how well the model works with pairs or groups of people.
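One common way to reduce spurious triggers when the model runs on the Pi is to act only on predictions that are both confident and stable across several consecutive frames. This is a technique we are suggesting here, not something described above; `GestureTrigger`, the 0.8 threshold and the 5-frame window are hypothetical values that would need tuning against real footage.

```python
from collections import deque
from typing import Deque, Optional


class GestureTrigger:
    """Fire once per sustained run of the same confident prediction."""

    def __init__(self, threshold: float = 0.8, window: int = 5) -> None:
        self.threshold = threshold  # minimum probability for a frame to count
        self.window = window        # consecutive frames required to fire
        self._recent: Deque[Optional[str]] = deque(maxlen=window)
        self._fired: Optional[str] = None  # label already fired for this run

    def update(self, label: str, probability: float) -> Optional[str]:
        """Feed one frame's top prediction; return a label when an event should fire."""
        # Low-confidence frames are stored as None so they break a run.
        self._recent.append(label if probability >= self.threshold else None)
        stable = (
            len(self._recent) == self.window
            and self._recent[0] is not None
            and len(set(self._recent)) == 1
        )
        if not stable:
            self._fired = None  # run broken: allow the next run to fire
            return None
        if self._fired == label:
            return None  # already fired for this run
        self._fired = label
        return label
```

Each frame’s top label and probability would be passed to `update()`, and only a non-`None` return value would trigger an event, so holding a gesture fires it once rather than on every frame.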

Neopixels

These are metre-long strips of individually addressable LEDs that can be chained together with more strips (or cut into smaller pieces). We have all of them running off a small microcontroller (Adafruit Feather M0), controlled over MQTT. They are pretty effective for indicating the flow of data, and surprisingly bright!
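To give a feel for what a “flow of data” animation might involve, the sketch below builds one frame of a moving pixel with a fading tail and serialises it as JSON, ready to be published over MQTT. The message format (`{"pixels": [[r, g, b], ...]}`) and the `flow_frame`/`frame_payload` helpers are assumptions; the Feather’s firmware would need to decode whatever format is actually used.

```python
import json
from typing import List, Tuple

RGB = Tuple[int, int, int]


def flow_frame(num_pixels: int, head: int, colour: RGB, tail: int = 4) -> List[RGB]:
    """One frame of a 'data flowing' animation: full brightness at `head`,
    fading linearly over `tail` pixels behind it. Off-strip positions are skipped."""
    frame: List[RGB] = [(0, 0, 0)] * num_pixels
    r, g, b = colour
    for i in range(tail + 1):
        pos = head - i
        if 0 <= pos < num_pixels:
            # Integer scaling: weight (tail + 1 - i) out of (tail + 1).
            w, d = tail + 1 - i, tail + 1
            frame[pos] = (r * w // d, g * w // d, b * w // d)
    return frame


def frame_payload(frame: List[RGB]) -> bytes:
    """Serialise a frame as JSON (hypothetical message format)."""
    return json.dumps({"pixels": [list(p) for p in frame]}).encode("utf-8")
```

Animating the strip would then mean advancing `head` by one on each tick (from 0 up to `num_pixels + tail`, so the tail runs off the end) and publishing each payload with an MQTT client library such as paho-mqtt.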

Traffic analysis

To add realism to our experience, it may be worth presenting actual traffic from participants’ devices while they are in the caravan. We’ve been running tests on the QNAP NAS box / home router: we sniff all traffic leaving the box and run a geolocation lookup on the destination IP addresses. This provides a nice semi-realtime overview of where traffic from one or more devices is going. We’ve attached this to the neopixel strip to provide a simple realtime display, showing the flag of the country that most of the traffic is going to.

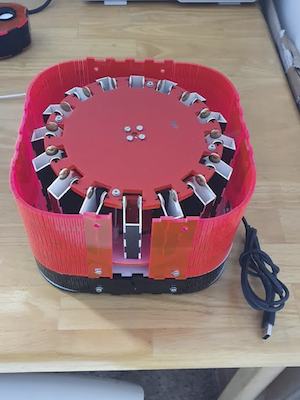
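The aggregation step behind the flag display can be sketched as follows: sum the bytes sent to each destination country and pick the largest. This assumes the sniffer yields `(destination IP, byte count)` pairs and that `lookup` wraps some IP-to-country database (for example a GeoIP database); both are hypothetical stand-ins, and `top_country` is an illustrative name.

```python
import ipaddress
from collections import Counter
from typing import Callable, Iterable, Optional, Tuple


def top_country(
    flows: Iterable[Tuple[str, int]],
    lookup: Callable[[str], Optional[str]],
) -> Optional[str]:
    """Return the country code receiving the most bytes, or None.

    `flows` holds (destination IP, bytes) pairs from the sniffer;
    `lookup` maps an IP to a country code, or None if unknown.
    """
    totals: Counter = Counter()
    for ip, nbytes in flows:
        addr = ipaddress.ip_address(ip)
        # Skip LAN, loopback, multicast and other non-public destinations.
        if not addr.is_global or addr.is_multicast:
            continue
        country = lookup(ip)
        if country is not None:
            totals[country] += nbytes
    if not totals:
        return None
    return totals.most_common(1)[0][0]
```

Weighting by bytes rather than by packet or connection count is a design choice: it favours destinations such as streaming services, whereas counting connections would favour chatty trackers and APIs.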

RFID devices

This was an experiment in using simple physical tokens to control a network. We have built an app that uses a mobile phone’s NFC chip to program RFID stickers and tags (currently with a device’s MAC address), and a little device that accepts little pots of RFID tags and reads them. The idea is to map tokens to devices, and to use these tokens as an easy way to control and interrogate arbitrary groups of devices (e.g. all devices used to consume media, or all of Sarah’s devices).
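One possible reading of the pot idea is that each tag identifies a single device by its MAC address, so a pot of tags stands for a group. The sketch below normalises the MAC strings read from the tags and returns the set of devices a pot represents; the helper names and the accepted MAC spellings are assumptions, not the app’s actual behaviour.

```python
import string
from typing import FrozenSet, Iterable, Optional

HEX_DIGITS = set(string.hexdigits)


def normalise_mac(raw: str) -> Optional[str]:
    """Turn 'AA-BB-CC-DD-EE-FF', 'aabb.ccdd.eeff' etc. into 'aa:bb:cc:dd:ee:ff'.
    Returns None if the payload is not a 12-hex-digit MAC address."""
    digits = raw.strip().lower().replace(":", "").replace("-", "").replace(".", "")
    if len(digits) != 12 or not set(digits) <= HEX_DIGITS:
        return None
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))


def devices_in_pot(tag_payloads: Iterable[str]) -> FrozenSet[str]:
    """The group a pot stands for: the set of valid MACs read from its tags.
    Duplicate tags collapse to one entry; unreadable payloads are dropped."""
    macs = (normalise_mac(p) for p in tag_payloads)
    return frozenset(m for m in macs if m is not None)
```

The resulting set could then be handed to whatever controls the router, for example to pause or inspect traffic for exactly those devices.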